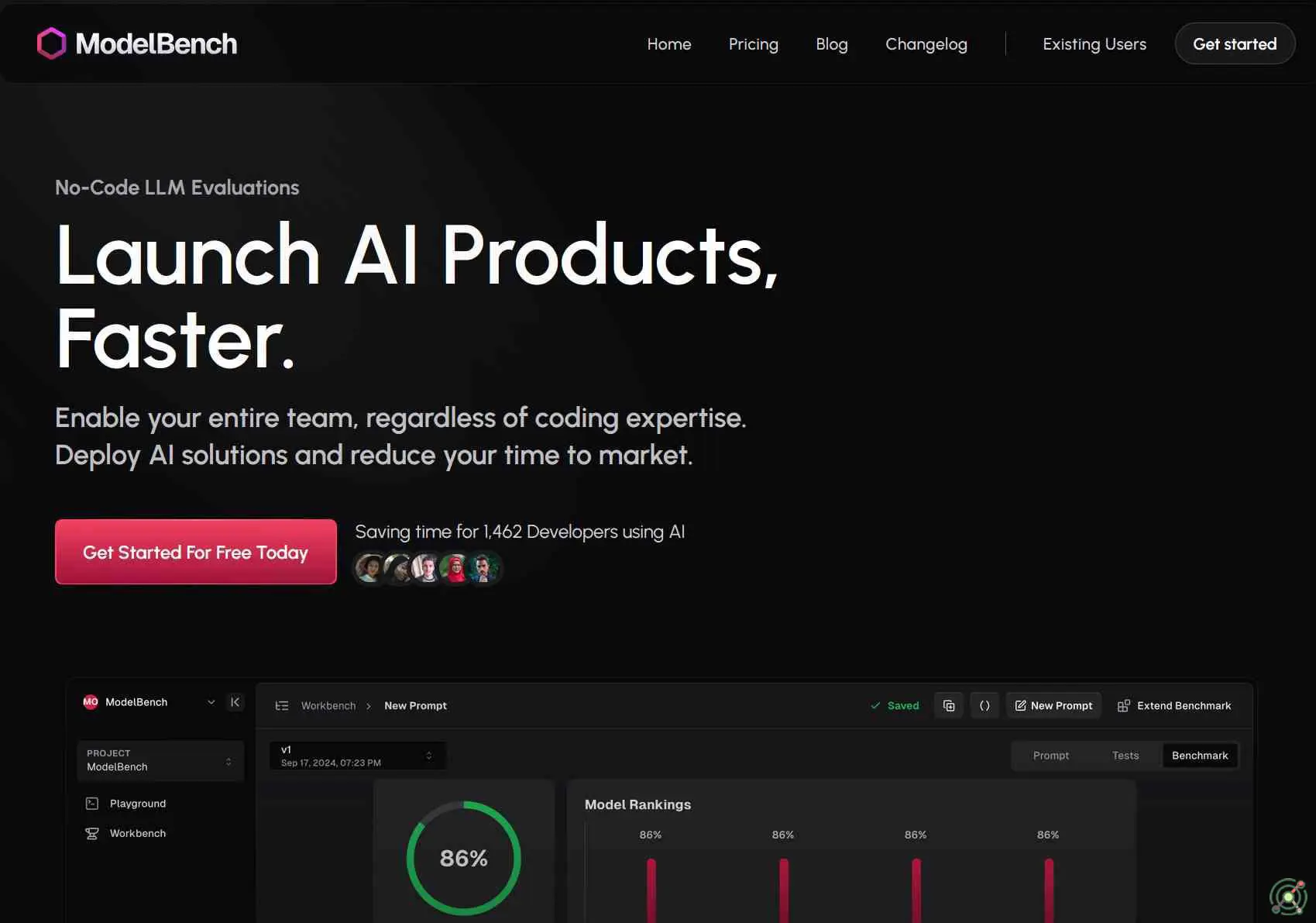

ModelBench AI

SKU: modelbench-ai

ModelBench AI is a no-code platform for evaluating and comparing more than 180 language models. Developers, product managers, and prompt engineers can use it to optimize prompts, benchmark models, and trace outputs without writing code. Key features include side-by-side model comparison, custom tool integration, prompt engineering with immediate feedback, and benchmarking across a range of scenarios. ModelBench AI aims to speed up AI development and testing and to improve collaboration within teams.

Evaluating and comparing multiple language models to identify the best fit for specific use cases.

Optimizing prompts and testing variations to enhance AI model performance.

Integrating custom tools into prompts for tailored AI solutions.

Benchmarking AI models across different scenarios to ensure robustness and reliability.

Collaborating within teams to streamline AI development and testing workflows.

ModelBench AI offers a high degree of autonomy through its no-code interface. Users first define the evaluation parameters and select the models and prompts; after this initial configuration, the platform needs minimal human intervention. It runs multi-model testing cycles across its 180+ supported models, tests prompts dynamically with variable inputs, and scales benchmarking workflows. It also generates performance metrics (accuracy, latency, and cost), versions prompts to support iterative improvement, and integrates evaluation tools such as LLM-based scoring. Together, these capabilities significantly reduce manual effort in AI development pipelines.
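For readers who want a concrete picture of what a multi-model testing cycle involves, the following is a minimal Python sketch of the general idea: fill a prompt template with variable inputs, run it against several models, and collect accuracy, latency, and cost. It is not ModelBench AI's implementation or API, since the platform is closed source and no-code. Every name here (`benchmark`, `Result`, the stub models, the per-call prices) is hypothetical and chosen only for illustration.

```python
import time
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass
class Result:
    model: str
    accuracy: float        # fraction of cases answered exactly right
    mean_latency_s: float  # average wall-clock seconds per call
    total_cost: float      # assumed flat price per call times number of calls


def benchmark(
    models: Dict[str, Callable[[str], str]],
    prompt_template: str,
    cases: List[Tuple[Dict[str, str], str]],
    cost_per_call: Dict[str, float],
) -> List[Result]:
    """Run every model on every test case and summarize the outcome."""
    results = []
    for name, call_model in models.items():
        correct = 0
        elapsed = 0.0
        for variables, expected in cases:
            # Dynamic prompt: substitute the case's variables into the template.
            prompt = prompt_template.format(**variables)
            start = time.perf_counter()
            output = call_model(prompt)
            elapsed += time.perf_counter() - start
            if output.strip() == expected:
                correct += 1
        n = len(cases)
        results.append(
            Result(name, correct / n, elapsed / n, cost_per_call[name] * n)
        )
    # Best accuracy first; cheaper model wins ties.
    return sorted(results, key=lambda r: (-r.accuracy, r.total_cost))


# Hypothetical stand-ins for real model calls, so the sketch runs offline.
def stub_upper(prompt: str) -> str:
    return prompt.split(": ", 1)[1].upper()


def stub_echo(prompt: str) -> str:
    return prompt.split(": ", 1)[1]


results = benchmark(
    models={"stub-upper": stub_upper, "stub-echo": stub_echo},
    prompt_template="Uppercase this word: {word}",
    cases=[({"word": "cat"}, "CAT"), ({"word": "dog"}, "DOG")],
    cost_per_call={"stub-upper": 0.002, "stub-echo": 0.001},  # made-up prices
)

for r in results:
    print(r.model, r.accuracy, round(r.total_cost, 4))
```

Exact-match scoring is the simplest possible metric; an LLM-based scorer of the kind mentioned above would replace the equality check with a call to a judging model.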

Closed Source

Free