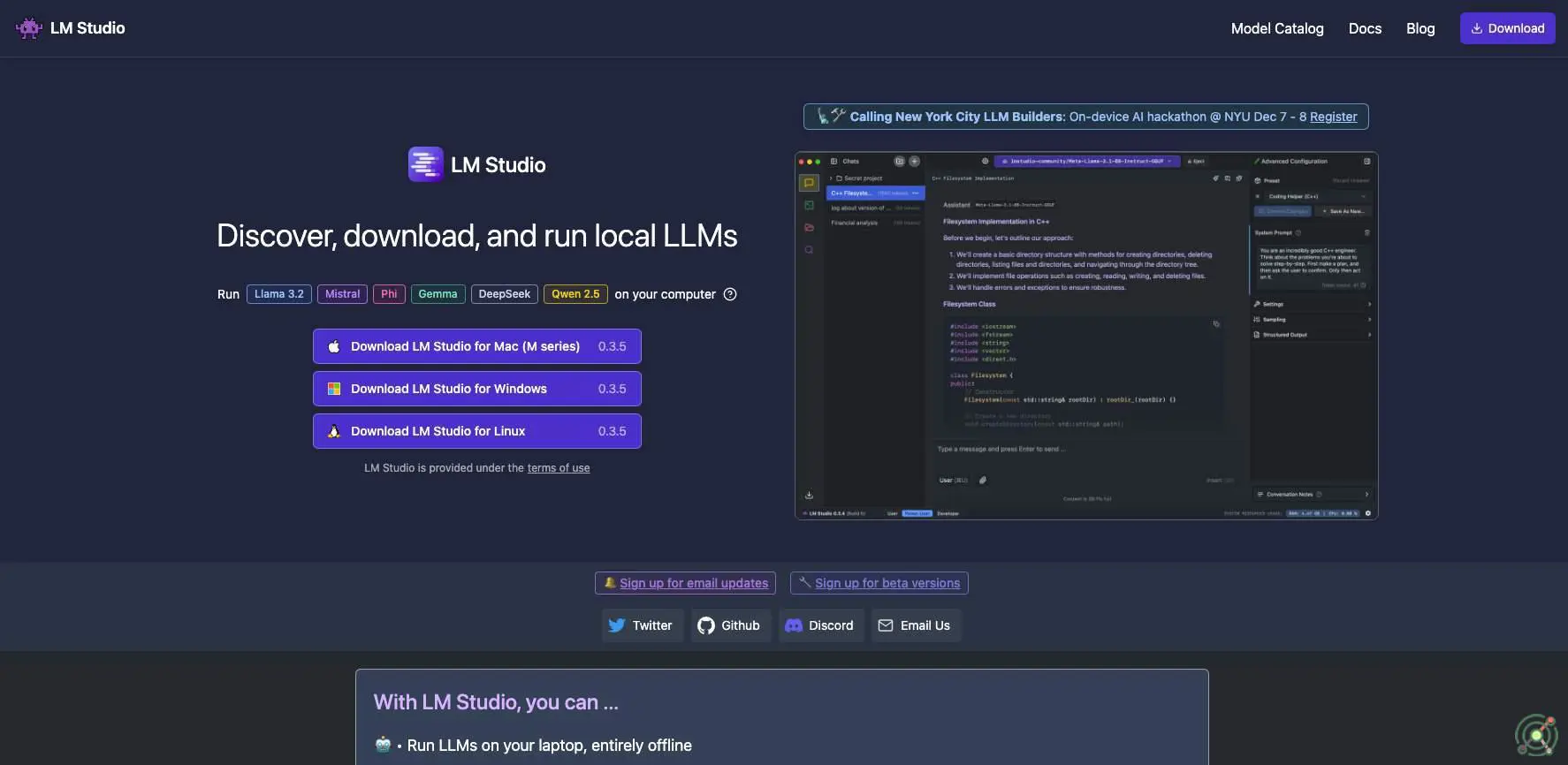

LM Studio

SKU: lm-studio

LM Studio is a desktop application for running large language models (LLMs) locally on personal computers. Available for macOS, Windows, and Linux, it lets users download and run models such as Llama, Mistral, Phi, Gemma, and StarCoder directly on their own hardware instead of through a cloud service. It provides a familiar chat interface, chat with local documents, an OpenAI-compatible local server, and built-in integration for finding and downloading models from Hugging Face. Once a model has been downloaded, inference runs entirely on the local machine, so prompts and documents do not need to leave the device. This makes LM Studio well suited to users who want AI capabilities while keeping their data private.
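Because the local server follows the OpenAI API shape, a client can talk to it with a plain HTTP POST. The sketch below assumes the server is running on its default address (`http://localhost:1234/v1`) with a model already loaded. The model name, the system prompt, and the helper names `build_chat_request` and `chat` are illustrative placeholders, not part of LM Studio itself.

```python
import json
import urllib.request

# Assumption: LM Studio's local server is listening on its default port (1234).
BASE_URL = "http://localhost:1234/v1"


def build_chat_request(model, user_message, temperature=0.7, max_tokens=256):
    """Build an OpenAI-style chat completions payload (model name is a placeholder)."""
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": user_message},
        ],
        # Inference parameters stay under the user's control.
        "temperature": temperature,
        "max_tokens": max_tokens,
    }


def chat(model, user_message, base_url=BASE_URL, timeout=60):
    """POST the payload to the local /chat/completions endpoint and return the reply text."""
    payload = json.dumps(build_chat_request(model, user_message)).encode("utf-8")
    req = urllib.request.Request(
        f"{base_url}/chat/completions",
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        body = json.load(resp)
    # OpenAI-style responses put the generated text in choices[0].message.content.
    return body["choices"][0]["message"]["content"]
```

With the server running, `chat("your-loaded-model", "Explain GGUF in one sentence.")` would return the model's reply. No request leaves `localhost`.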

Running large language models locally without dependence on cloud services.

Maintaining data privacy by processing information directly on personal devices.

Accessing and managing multiple AI models through a unified platform.

Developing AI applications with no network round-trip to a remote API and no dependence on a third-party service's availability.
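When several models are managed through the same application, a client can discover them from one endpoint. The sketch below assumes that the local server exposes the OpenAI-style `GET /v1/models` listing on the default port. The helpers `parse_model_ids` and `list_local_models` are hypothetical names chosen for illustration.

```python
import json
import urllib.request


def parse_model_ids(response_body):
    """Extract model identifiers from an OpenAI-style /v1/models JSON response."""
    data = json.loads(response_body)
    return [entry["id"] for entry in data.get("data", [])]


def list_local_models(base_url="http://localhost:1234/v1", timeout=10):
    """Query the local server (assumed default address) for the models it can serve."""
    with urllib.request.urlopen(f"{base_url}/models", timeout=timeout) as resp:
        return parse_model_ids(resp.read().decode("utf-8"))
```

The parsing is kept separate from the network call so that it can be reused or tested without a running server.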

LM Studio offers a high degree of autonomy. Models run offline on local hardware, and no cloud connection is required after the initial model download. Users keep full control over data processing, model selection (via the Hugging Face integration), and inference parameters, with no external oversight. This autonomy has limits, however. Models must fit the local hardware's capabilities (AVX2 instruction support and available GPU resources). They must also be packaged in the GGUF format, which limits compatibility with models and tools built around other formats. Finally, licensing terms for business use introduce an external governance factor.
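One of the hardware constraints above, AVX2 support, can be checked before installation. The sketch below is a generic Linux heuristic that reads the CPU flags in `/proc/cpuinfo`. It is an assumption-based illustration, not LM Studio's own compatibility check. On systems without that file, such as macOS or Windows, it returns `None` rather than guessing.

```python
import os


def cpuinfo_has_avx2(cpuinfo_text):
    """Return True if any 'flags' line in /proc/cpuinfo-style text lists avx2."""
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        # Only the 'flags' field lists CPU instruction-set extensions.
        if sep and key.strip() == "flags" and "avx2" in value.split():
            return True
    return False


def host_has_avx2(path="/proc/cpuinfo"):
    """Linux-only check; returns None when the cpuinfo file is unavailable."""
    if not os.path.exists(path):
        return None
    with open(path, encoding="utf-8", errors="replace") as f:
        return cpuinfo_has_avx2(f.read())
```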

Closed Source

Contact