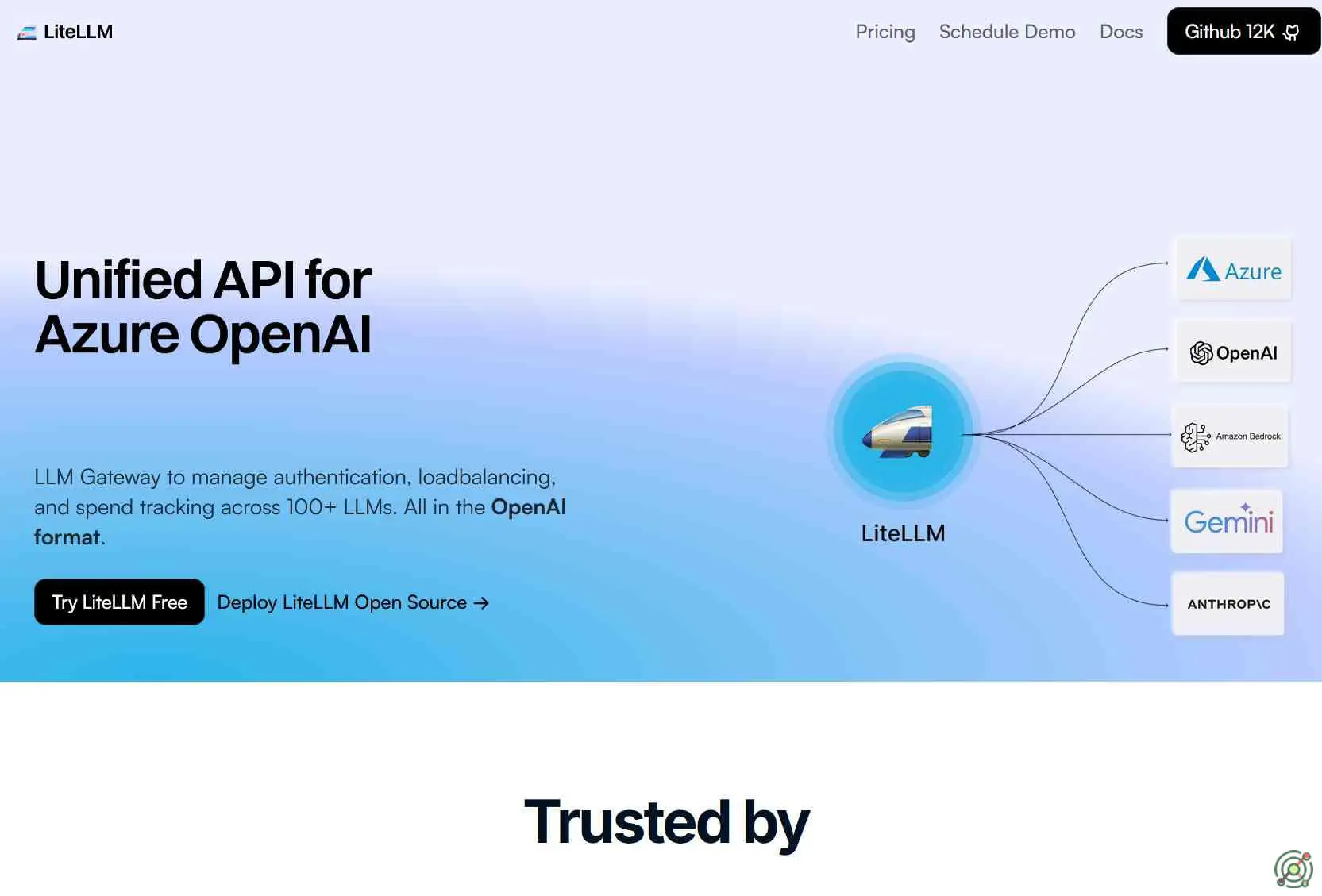

LiteLLM

SKU: litellm

LiteLLM is an open-source toolkit that provides a unified, OpenAI-format API for more than 100 large language models (LLMs). It ships as both a Python SDK and a proxy server (LLM Gateway) that handle authentication, load balancing, and spend tracking across providers such as OpenAI, Azure OpenAI, Vertex AI, and Amazon Bedrock. It also supports retry and fallback logic, rate limiting, and logging integrations with tools like Langfuse and OpenTelemetry. The aim is to let applications switch between or combine LLMs while receiving responses in one consistent format.
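As a rough illustration of the unified API, the sketch below wraps a single chat call so that only the model string changes between providers. It assumes the SDK's `completion(model=..., messages=...)` call and the OpenAI-style `choices[0].message.content` response shape. The `ask` helper, the `fake_completion` stand-in, and the model names (including the `my-deployment` Azure deployment) are hypothetical and exist only so the example runs without credentials.

```python
from types import SimpleNamespace


def ask(model, prompt, completion_fn=None):
    """Send one user prompt to `model` and return the reply text."""
    if completion_fn is None:
        # Imported lazily so the sketch can be tried without the package installed.
        from litellm import completion as completion_fn
    response = completion_fn(
        model=model,
        messages=[{"role": "user", "content": prompt}],
    )
    # OpenAI-format response: the text lives at choices[0].message.content.
    return response.choices[0].message.content


def fake_completion(model, messages):
    """Hypothetical stand-in that mimics the OpenAI response shape."""
    text = f"[{model}] echo: {messages[-1]['content']}"
    message = SimpleNamespace(content=text)
    return SimpleNamespace(choices=[SimpleNamespace(message=message)])


if __name__ == "__main__":
    # Illustrative model strings; the calling code is identical for both.
    for model in ("gpt-4o-mini", "azure/my-deployment"):
        print(ask(model, "Hello", completion_fn=fake_completion))
```

Dropping the `completion_fn` argument would route the call through the real SDK, which requires the package to be installed and the relevant provider credentials to be set in the environment.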

Giving developers a unified interface to interact with multiple LLM providers.

Implementing load balancing and fallback mechanisms across different LLMs.

Tracking and managing API usage and spending across various projects.

Integrating LLM functionalities into applications with consistent output formats.

LiteLLM demonstrates high operational autonomy: it handles API translation, load balancing, and error handling across 100+ LLMs without manual intervention for routine operations. It also manages retry and fallback logic across deployments and provides built-in cost tracking and budget enforcement. It is not fully self-sufficient, however: it needs initial configuration (model selection and environment setup) and depends on external LLM providers' APIs.
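The retry, fallback, and budget behavior described here can be pictured with a small standalone sketch. This is not LiteLLM's implementation or API; `FallbackRouter`, `BudgetExceeded`, `make_flaky`, and the per-call costs are hypothetical names and values chosen only to show the control flow: try each deployment in order, retry a few times on failure, and refuse calls that would exceed the budget.

```python
class BudgetExceeded(Exception):
    """Raised when no deployment can be called within the remaining budget."""


class FallbackRouter:
    def __init__(self, deployments, budget, max_retries=1):
        # deployments: ordered list of (name, callable, cost_per_call)
        self.deployments = deployments
        self.budget = budget
        self.max_retries = max_retries
        self.spent = 0.0

    def complete(self, prompt):
        last_error = None
        for name, call, cost in self.deployments:
            if self.spent + cost > self.budget:
                continue  # skip deployments that would exceed the budget
            for _ in range(1 + self.max_retries):
                try:
                    reply = call(prompt)
                except Exception as exc:  # retry, then fall through to the next one
                    last_error = exc
                    continue
                self.spent += cost  # assumption: only successful calls are charged
                return name, reply
        if last_error is None:
            raise BudgetExceeded("no deployment fits the remaining budget")
        raise RuntimeError("all deployments failed") from last_error


def make_flaky(failures, label):
    """Return a fake deployment that fails `failures` times, then succeeds."""
    state = {"left": failures}

    def call(prompt):
        if state["left"] > 0:
            state["left"] -= 1
            raise ConnectionError("simulated outage")
        return f"{label}: {prompt}"

    return call


if __name__ == "__main__":
    router = FallbackRouter(
        [("primary", make_flaky(5, "p"), 0.01), ("backup", make_flaky(1, "b"), 0.01)],
        budget=0.01,
    )
    print(router.complete("hi"))  # primary exhausts its retries, backup answers
```

In this sketch the budget is checked before each deployment is tried, so a second request against the same router is rejected once the first successful call has used up the allowance.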

Open Source

Free