LangWatch

SKU: langwatch

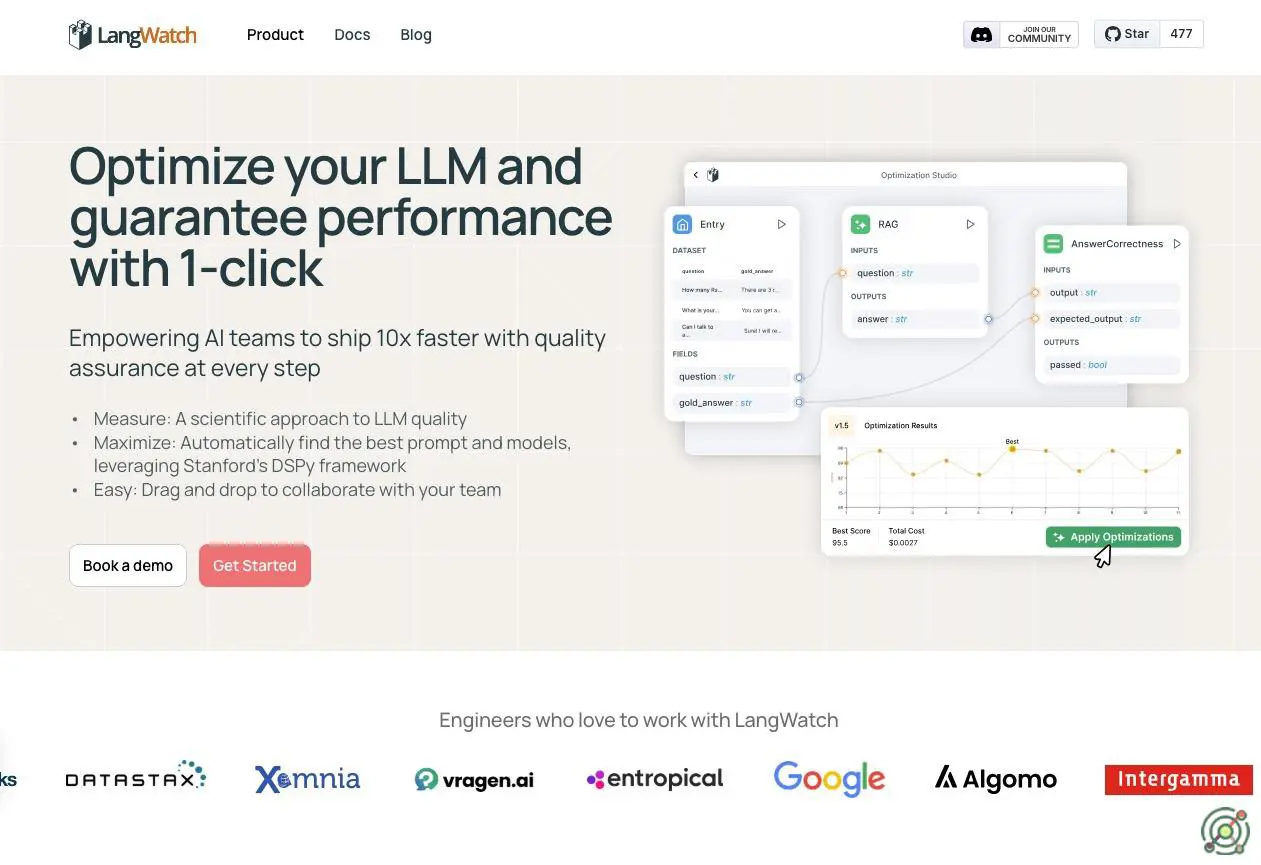

LangWatch is a platform that helps AI teams build and deploy large language model (LLM) applications efficiently and reliably. It provides tools for monitoring, evaluating, and optimizing LLM workflows so that teams can track output quality and performance throughout development. Its Optimization Studio offers a visual interface for building and refining LLM pipelines, and its integration with Stanford's DSPy framework lets users automate prompt optimization and evaluate model outputs. The platform works with a range of model providers, including OpenAI, Anthropic's Claude, Azure, Google Gemini, and Hugging Face, and integrates into existing tech stacks, making it a versatile option for AI development and deployment.

Monitoring and evaluating the performance of LLM applications.

Automating prompt optimization to enhance model outputs.

Ensuring quality assurance in AI development workflows.

Integrating with various LLM providers and existing tech stacks.

LangWatch shows moderate autonomy: it monitors LLM interactions automatically, detects issues in real time (e.g., jailbreaking attempts or biased outputs), and applies built-in guardrails that mitigate problems without human intervention. Its autonomy is limited, however, by its primary role as an observability platform rather than an independent task-executing agent. Although it analyzes LLM interactions on its own and can trigger alerts or actions through API integrations, decisions about adjusting the system, including configuration and final implementation, still require human oversight.

Closed Source

Paid